Nano Banana Drives a Surge in AI-Powered Creativity

Google DeepMind’s latest image model, Nano Banana, has quickly become a centerpiece of the Gemini app, with more than 5 billion images generated since its introduction in late August 2025. The model, designed for both image generation and editing, is now integrated into the Gemini app’s Canvas tool and Google AI Studio, where users can create, modify, and blend images.

“It’s a giant quality leap, especially for image editing,” said Nicole Brichtova, Nano Banana’s product lead. The model’s multimodal capabilities—processing both text and images simultaneously—enable a new level of creative control. Users can make subtle adjustments, such as changing the color of a sofa or editing a person’s outfit, without disturbing the rest of the scene. This “pixel-perfect editing” is a key feature, allowing for granular changes that previously required specialized tools.

Consistency and Context: Key Innovations

Nano Banana stands out for its ability to maintain scene and character consistency across multiple edits and generations. Whether altering a subject’s pose, outfit, or even the lighting and angle, the model preserves individual likenesses—a capability that addresses a longstanding challenge in AI image editing. “We’ve progressed from something that looks like your AI distant cousin to images that look like you,” said David Sharon, Gemini App Product Manager, referencing the improvements in the underlying Gemini 2.5 Flash Image technology.

The model’s contextual awareness also means it can “remember” previous images in a conversation, enabling iterative workflows. Users can build up complex scenes step by step, such as furnishing a room or transforming a sketch into a photorealistic image, without needing to repeat or over-specify their instructions. Nano Banana also draws on real-world knowledge to interpret vague or high-level prompts, applying logical reasoning to fill in gaps.
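
For readers who think in code, the iterative workflow described above can be pictured with a small toy model. This is only an illustrative sketch, not Google's API: the `Turn` and `EditSession` names, the request layout, and the `image-vN` labels are all hypothetical, and no model is actually called. It shows why a short follow-up such as "Put a lamp next to it" can work when each request carries the earlier turns with it.

```python
from dataclasses import dataclass, field


@dataclass
class Turn:
    """One step in an editing conversation (hypothetical structure)."""
    instruction: str
    image_ref: str  # placeholder label for the image produced at this step


@dataclass
class EditSession:
    """Toy model of a context-aware editing session; not a real API."""
    turns: list[Turn] = field(default_factory=list)

    def build_request(self, instruction: str) -> dict:
        # Earlier turns travel with the request, so the new instruction
        # can stay short and refer back to what already exists.
        return {
            "history": [(t.instruction, t.image_ref) for t in self.turns],
            "instruction": instruction,
        }

    def apply(self, instruction: str) -> dict:
        request = self.build_request(instruction)
        # Stand-in for a model call: only a label for the new image is recorded.
        image_ref = f"image-v{len(self.turns) + 1}"
        self.turns.append(Turn(instruction, image_ref))
        return request


session = EditSession()
session.apply("Generate an empty living room with wooden floors")
session.apply("Add a green sofa")
request = session.apply("Put a lamp next to it")
print(len(request["history"]))  # number of earlier turns sent as context
```

In this sketch the third request includes both earlier turns, which is the kind of context that would let "it" be resolved to the sofa without the user restating the scene.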

Creative Use Cases and App Integration

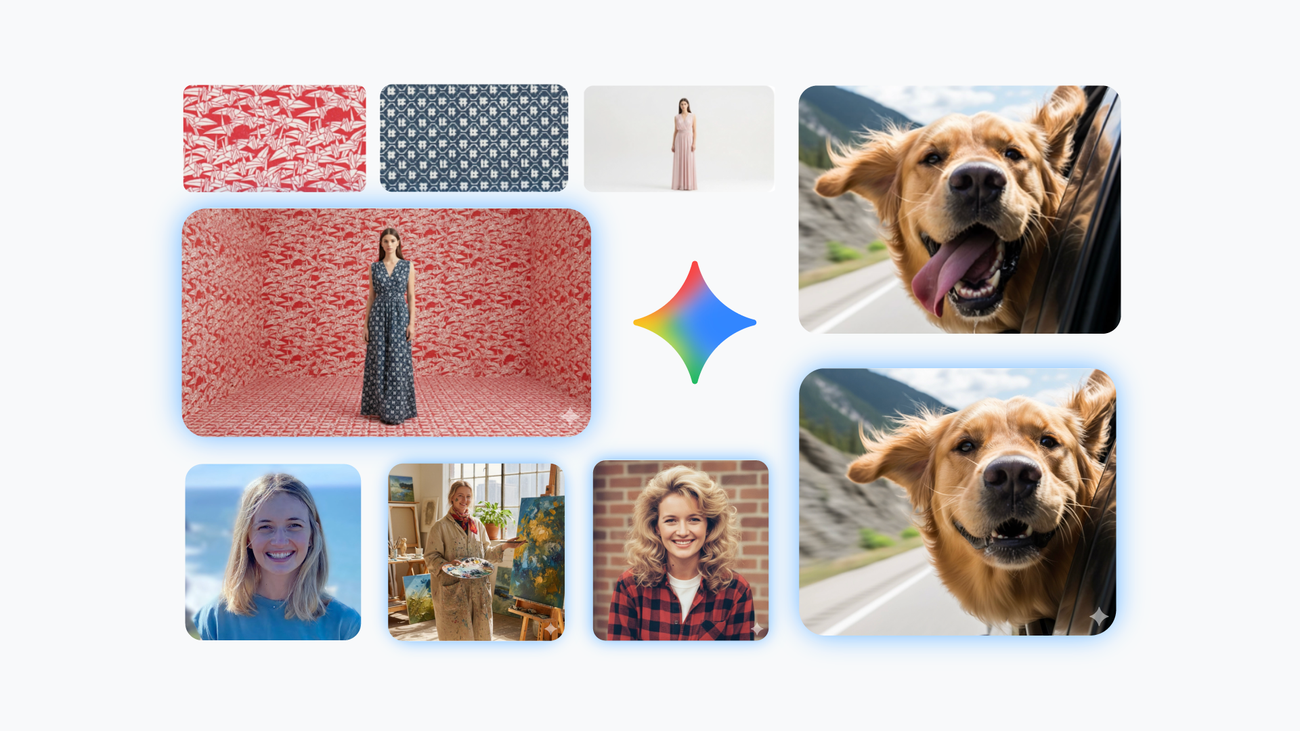

Since launch, Nano Banana has inspired a wave of inventive applications. One trend involves turning personal photos into figurines with a single prompt. The model also supports merging up to three images, allowing users to blend elements, restore old photos, or remix creative ideas.

The technology underpins new app experiences within the Gemini ecosystem. For example, the “PictureMe” template in Canvas lets users upload a single photo and instantly generate themed variations—such as ’80s mall portraits or professional headshots—across six different styles. This functionality, developed as an internal passion project by Google staff, demonstrates the model’s versatility and ease of integration for both consumers and developers.

Nano Banana’s capabilities extend to UI mockups and design workflows as well. Users can upload interface drafts and make targeted adjustments—like resizing a logo or changing a button color—without affecting the overall layout, streamlining rapid prototyping and iteration.

Global Adoption and Ongoing Development

The response to Nano Banana has been overwhelmingly positive, according to Google. The model’s accessibility and accuracy have “unleashed human creativity at scale,” said Sharon. The development team is already working on further enhancements, building on the momentum from global adoption and diverse use cases.

With its native multimodal architecture, scene consistency, and context-aware editing, Nano Banana is setting a new benchmark for AI-driven image creation and modification. As the technology evolves, it is poised to expand creative possibilities for both everyday users and professional developers across Google DeepMind, the Gemini app, and the broader AI ecosystem.